This article explores using Airflow 2 in environments with multiple teams (tenants) and concludes with a brief overview of out-of-the-box features to be (potentially) delivered in Airflow 3.

TL;DR

Airflow 2 does not natively support multi-tenancy. For strong isolation, it's recommended that each team be provided with its own separate Airflow environment. If you deploy and manage Airflow yourself, you can share some resources (such as a database server) while keeping the environments isolated. Workarounds also allow multiple teams to use a single Airflow instance, but these solutions typically require trust between users and may not offer complete isolation (it is not possible to fully prevent users from impacting one another).

Airflow 3 is expected to eventually support multi-team deployments, as the relevant proposal (AIP-67) has been accepted, but the specifics are not yet fully defined or implemented.

Introduction

In environments with multiple teams, companies often look for a single Airflow deployment in which each team has access only to the subset of resources that belongs to it. The main motivations are lower costs and simpler management compared to maintaining multiple separate deployments. While Airflow is a robust platform for orchestrating workflows, its architecture needs adaptation to support diverse team needs, access controls, and workloads.

A thorough review was conducted across various sources, including PRs, GitHub issues and discussions, Stack Overflow questions, articles, blog posts, official Airflow documentation, and presentations from the Airflow Summit. The aim was not only to explore the possible setups of Airflow for multiple teams but also to identify best practices, examine how other organizations are solving this problem in real-world scenarios, and uncover any potential "hacks" or innovative approaches that could be applied to enhance the solution.

A sincere thank you to all the Airflow community members and everyone who provided thoughtful feedback to help shape this document!

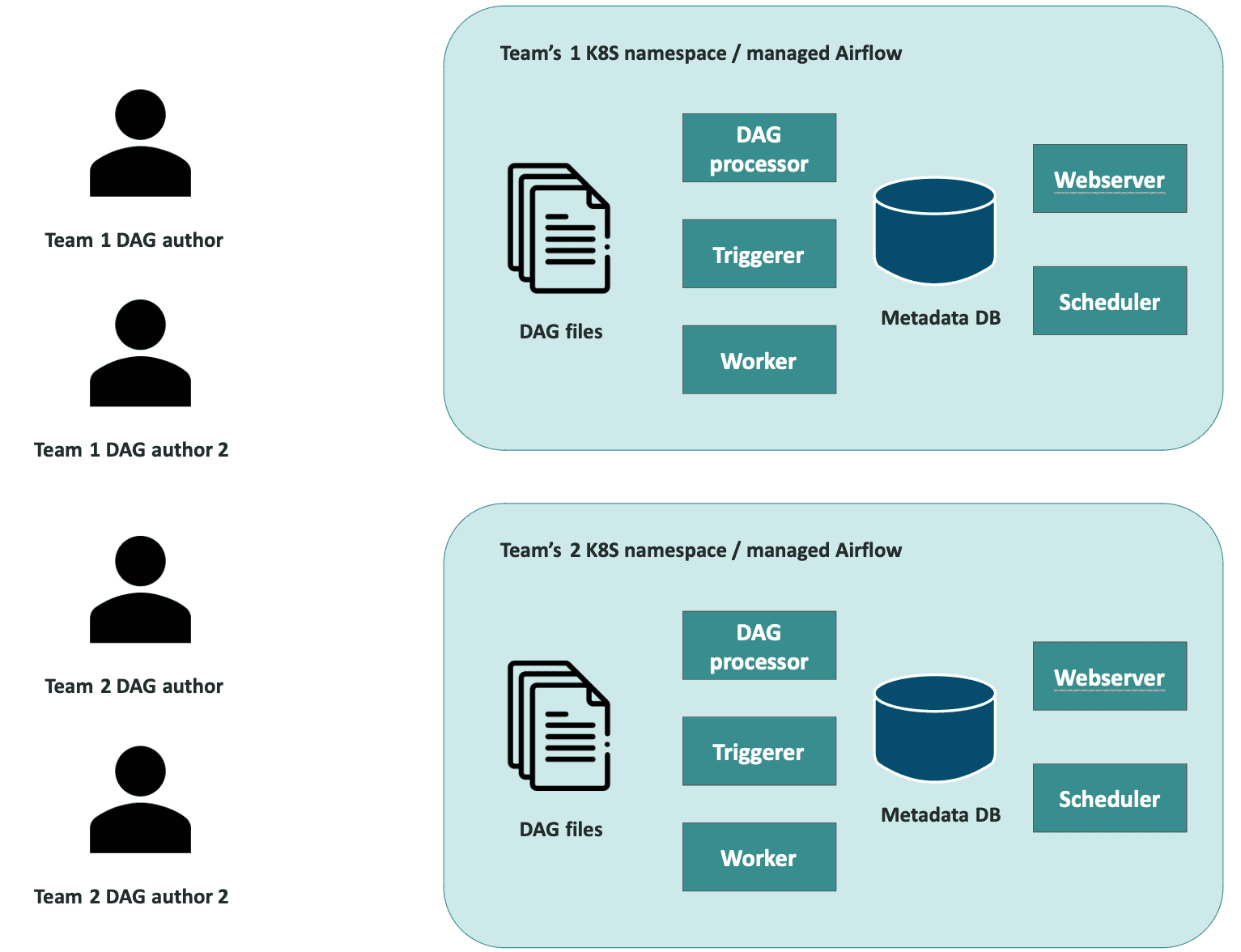

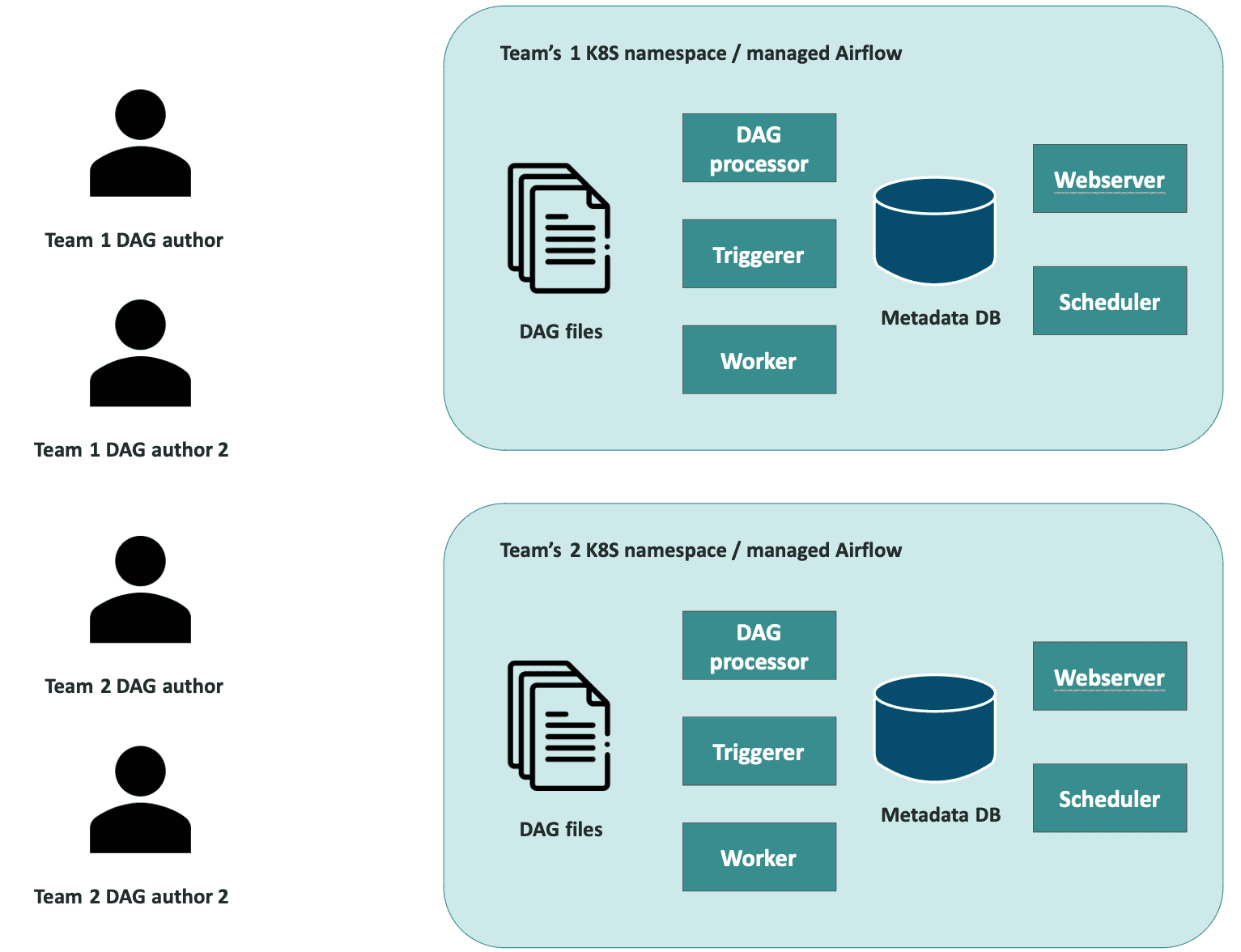

Separate Airflow deployment per team

This is the only approach that offers full isolation.

Description

Each team has its own dedicated Airflow instance, running in isolation from other teams. This solution involves deploying a complete Airflow instance for each team, including all required components (database, web server, scheduler, and workers) as well as its own configuration and execution environment (libraries, operating system, variables, and connections).

With self-managed Airflow deployments, some resources can be "shared" to reduce infrastructure costs while keeping the Airflow instances team-specific. For example, the metadata database of each Airflow instance can be hosted in a separate database schema on a single database server. This "shared database" approach ensures full isolation from the user's perspective, though resource contention might occur at the infrastructure level. Note that this setup is suited mainly to self-managed Airflow deployments: it requires control over the environment configuration, which is typically not possible with managed services like Google Cloud Composer, AWS MWAA, or Astronomer. Managed services usually provide a single database instance per Airflow environment, so creating separate schemas for different teams is not possible. Additionally, managed Airflow services often run on a dedicated Kubernetes cluster provisioned by the platform, which means users cannot deploy Airflow on a cluster of their choice, further limiting flexibility and customization.

Setup

Provision multiple Airflow environments according to your organization's policies, whether deploying via a Helm chart or using a managed service like Composer. These options offer enough flexibility in configuration to ensure that each team's environment is tailored to their specific needs, from resource allocation to custom execution environments.

When self-hosting Airflow, e.g., on Kubernetes (without a managed Airflow service), create a separate namespace within the Kubernetes cluster for each Airflow instance. If you share a database server, host each Airflow instance's metadata database in a separate database schema. You can also consider running task workloads on the same Kubernetes cluster (see the KubernetesExecutor).
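As a rough sketch of the shared-database setup, assuming PostgreSQL as the shared server and one Kubernetes namespace per team, the per-team settings could be generated as below. The team names, host, database name, and the `airflow_<team>` naming scheme are hypothetical. Each team gets its own login role that owns its own schema, and the role's `search_path` makes Airflow's metadata tables land in that schema. `AIRFLOW__DATABASE__SQL_ALCHEMY_CONN` is the environment-variable form of Airflow's `[database] sql_alchemy_conn` option (Airflow 2.3+).

```python
import re
from dataclasses import dataclass
from urllib.parse import quote

# Hypothetical naming rule: lowercase team names such as "team_a".
_TEAM_NAME = re.compile(r"[a-z][a-z0-9_]{0,40}")


@dataclass(frozen=True)
class TeamAirflowDb:
    namespace: str        # Kubernetes namespace for the team's Airflow instance
    setup_sql: list[str]  # run once by a DB admin on the shared PostgreSQL server
    env: dict[str, str]   # environment for the team's Airflow components


def team_airflow_db(team: str, host: str, database: str, password: str) -> TeamAirflowDb:
    # Validate first: the name is interpolated into SQL identifiers and a namespace.
    if not _TEAM_NAME.fullmatch(team):
        raise ValueError(f"invalid team name: {team!r}")
    role = schema = f"airflow_{team}"
    setup_sql = [
        # One login role per team; set its password out of band.
        f"CREATE ROLE {role} LOGIN;",
        # The team's role owns its schema; other teams' roles get no privileges on it by default.
        f"CREATE SCHEMA {schema} AUTHORIZATION {role};",
        # Unqualified table names (Airflow's metadata tables) resolve to the team's schema.
        f"ALTER ROLE {role} SET search_path = {schema};",
    ]
    conn = f"postgresql+psycopg2://{role}:{quote(password, safe='')}@{host}:5432/{database}"
    return TeamAirflowDb(
        # Namespace names must be DNS labels, so underscores become hyphens.
        namespace=f"airflow-{team.replace('_', '-')}",
        setup_sql=setup_sql,
        env={"AIRFLOW__DATABASE__SQL_ALCHEMY_CONN": conn},
    )


if __name__ == "__main__":
    cfg = team_airflow_db("team_a", "pg.internal", "airflow", "change-me")
    print(cfg.namespace)
    print("\n".join(cfg.setup_sql))
```

In practice, the resulting connection string would be stored in a Kubernetes Secret in the team's namespace rather than passed around as a plain environment variable.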

Pros

- Complete Isolation: Each team has its own environment, eliminating cross-team security risks.

- Custom Configurations: Teams have full control over configurations and library versions.

- Independent Scaling: Teams can scale their Airflow instances based on their workload needs.

Cons

- Higher Cost: Separate managed Airflow environments are more expensive than a single one of the same size. Self-managing Airflow (e.g., on K8s) allows more cost optimization, such as sharing a database server to reduce infrastructure overhead.

- Operational Overhead: Managing multiple deployments or sharing some resources increases the infrastructure burden, often requiring platform engineers to spend time on setup, monitoring, and optimization.

- Inconsistent User Experience: Different setups across teams can make troubleshooting and collaboration harder.

- Noisy Neighbor: One environment may consume an excessive share of shared cluster resources, potentially degrading the performance of other environments. Proper resource management and isolation are required to mitigate these issues.

- (For setup with shared database) Resource Contention: Shared resources can lead to performance issues if not managed properly.

Best For

- Teams working with sensitive data (medical, financial, etc.)

- Large organizations where teams have vastly different workloads, security concerns, or compliance requirements.

- Environments where teams need complete control over their Airflow configurations or have complex workflows requiring custom dependencies.

- Scenarios in which no trust between users can be expected and no agreements or shared governance can be established between teams, necessitating strict isolation of resources and environments.

- (For setup with shared database) Organizations seeking cost efficiency with platform engineers capable of handling setup and maintenance.

Possible challenges

A single authentication point is needed for multiple Airflow environments

Authentication can be streamlined across these multiple instances. With features like Airflow’s Auth Manager, a centralized identity provider, such as Keycloak, can provide unified access to all web server instances. This simplifies authentication management across teams, with a single sign-on (SSO) experience and consistent URL structures for accessing the UI.
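One way this could look, assuming each environment keeps the default FAB auth manager and all environments point at one shared Keycloak realm (Keycloak 17+ URL layout, without the `/auth` prefix): the function below builds the `OAUTH_PROVIDERS` list for an environment's `webserver_config.py`. The realm, client IDs, and the `KEYCLOAK_CLIENT_SECRET` variable are hypothetical, and the provider keys should be checked against the Flask AppBuilder version in use.

```python
import os


def keycloak_oauth_providers(keycloak_url: str, realm: str, client_id: str) -> list[dict]:
    """Build a Flask AppBuilder OAUTH_PROVIDERS list for one shared Keycloak realm.

    Every Airflow environment uses the same realm but its own (hypothetical) client ID,
    so users sign in once and can reach each team UI they are entitled to.
    """
    realm_url = f"{keycloak_url.rstrip('/')}/realms/{realm}"
    oidc = f"{realm_url}/protocol/openid-connect"
    return [
        {
            "name": "keycloak",
            "icon": "fa-key",
            "token_key": "access_token",
            "remote_app": {
                "client_id": client_id,
                # Hypothetical variable; inject the secret from your secret store.
                "client_secret": os.environ.get("KEYCLOAK_CLIENT_SECRET", ""),
                "api_base_url": f"{realm_url}/protocol/",
                "access_token_url": f"{oidc}/token",
                "authorize_url": f"{oidc}/auth",
                "request_token_url": None,
                "client_kwargs": {"scope": "openid email profile"},
            },
        }
    ]


# In each environment's webserver_config.py (FAB auth manager):
# from flask_appbuilder.security.manager import AUTH_OAUTH
# AUTH_TYPE = AUTH_OAUTH
# OAUTH_PROVIDERS = keycloak_oauth_providers("https://sso.example.com", "airflow", "airflow-team-a")
```

Using one realm with one client per environment keeps a single login while still letting Keycloak decide which users may reach which team's UI.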

Managing multiple deployments is consuming too much time and effort

Leveraging Airflow’s Helm chart simplifies Kubernetes orchestration by automating deployment, scaling, and configuration across environments. Additionally, using infrastructure-as-code (IaC) tools like Terraform enables consistent, repeatable infrastructure setups, making it easier to manage infrastructure changes and track versions. Implementing centralized logging, monitoring with tools like Prometheus or Grafana, and automated backups further helps streamline operations and reduces the overhead of maintaining multiple Airflow instances.
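The Helm-based part of this automation can be scripted. The sketch below assumes the official `apache-airflow/airflow` chart has been added as a Helm repository, that each team's DAGs live in a separate Git repository, and that a Secret named `<namespace>-metadata` (a hypothetical naming scheme) holds each team's metadata database connection. It only builds the `helm upgrade --install` commands; in practice the same values would more likely be managed through Terraform or a CI pipeline, with the chart version pinned.

```python
import shlex


def helm_commands(teams: dict[str, str]) -> list[list[str]]:
    """Return one `helm upgrade --install` command per team.

    `teams` maps a team name to the Git repository with that team's DAGs (hypothetical layout).
    """
    commands = []
    for team, dags_repo in sorted(teams.items()):
        namespace = f"airflow-{team.replace('_', '-')}"
        commands.append([
            "helm", "upgrade", "--install", namespace, "apache-airflow/airflow",
            "--namespace", namespace, "--create-namespace",
            # Settings shared by every team live in one file (hypothetical name).
            "--values", "values-common.yaml",
            # Per-team overrides of the official chart's values.
            "--set", "executor=KubernetesExecutor",
            "--set", "postgresql.enabled=false",
            "--set", f"data.metadataSecretName={namespace}-metadata",
            "--set", "dags.gitSync.enabled=true",
            "--set", f"dags.gitSync.repo={dags_repo}",
        ])
    return commands


if __name__ == "__main__":
    for cmd in helm_commands({"team_a": "https://git.example.com/team-a/dags.git"}):
        print(shlex.join(cmd))
```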

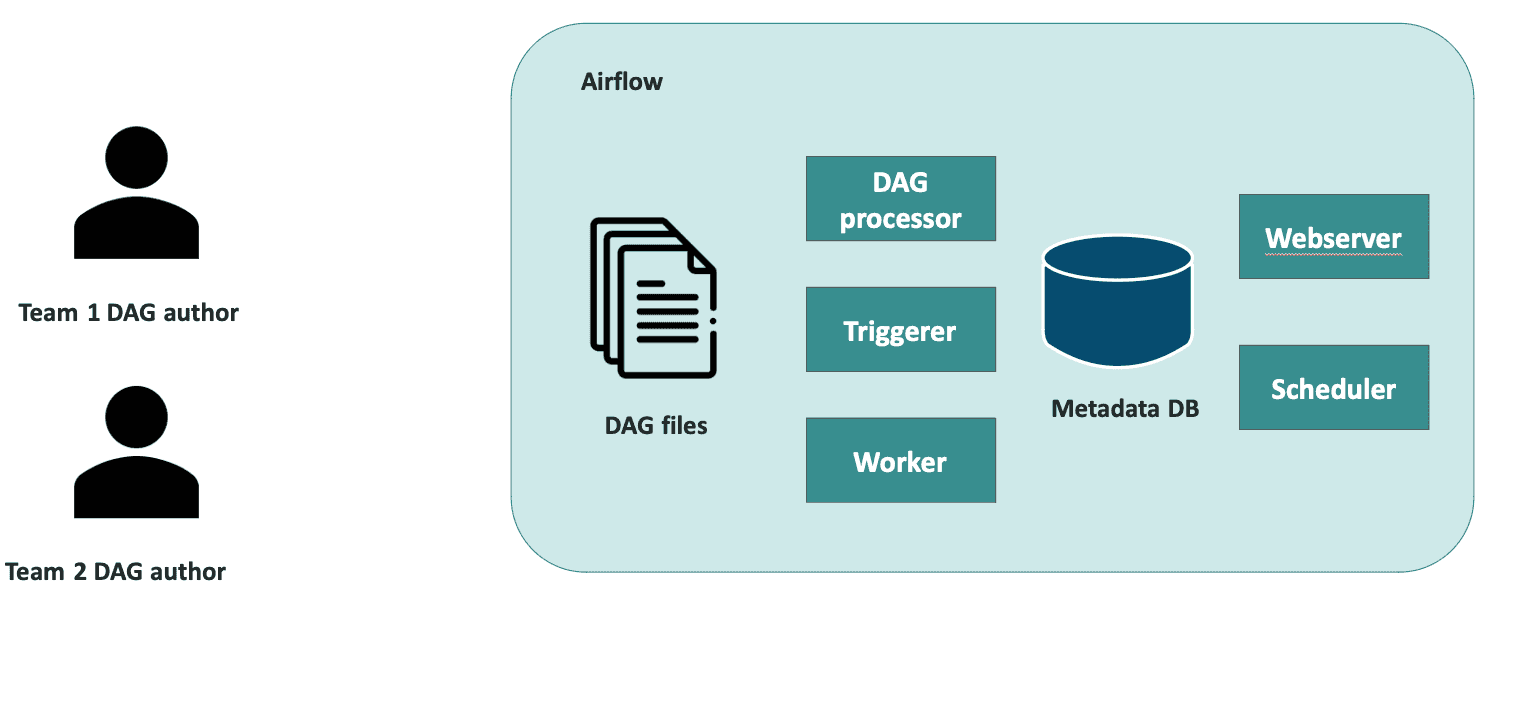

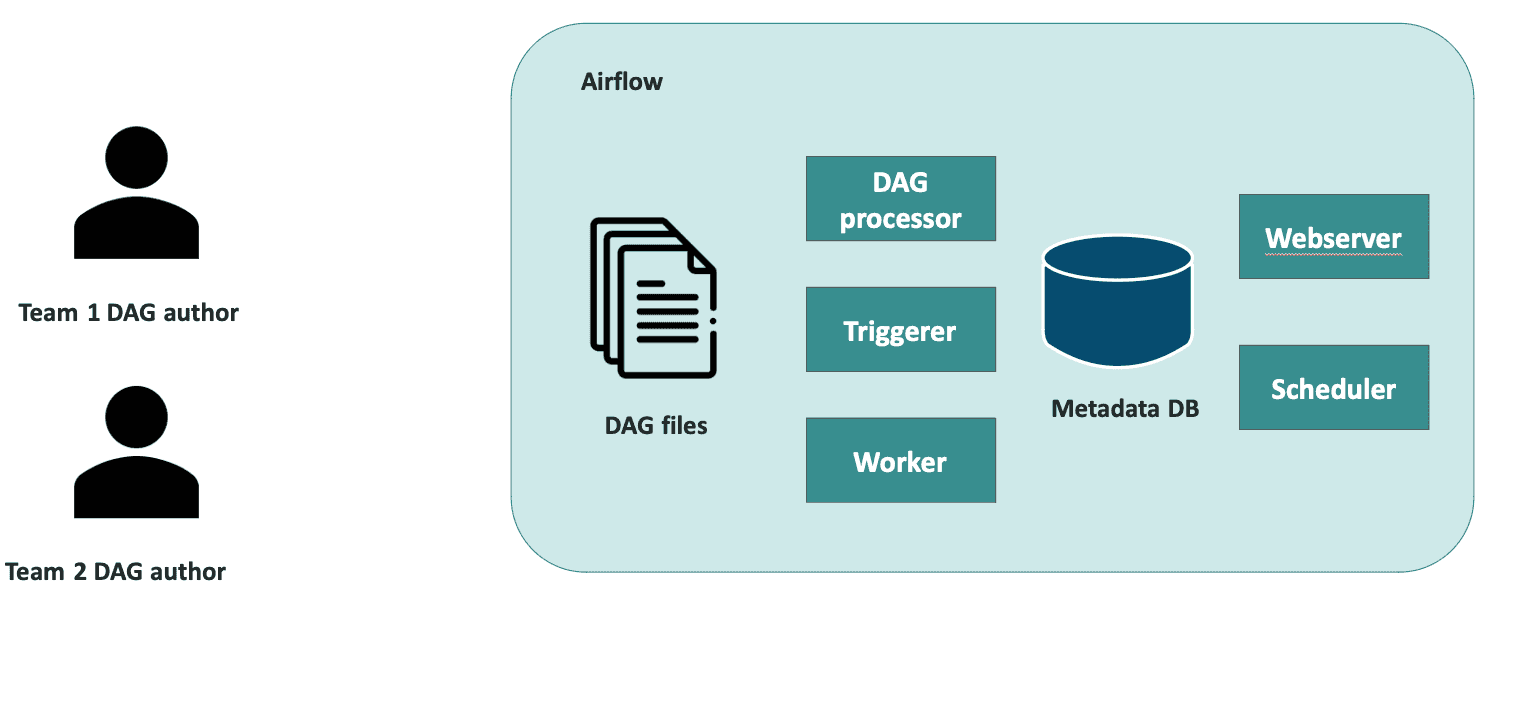

A single instance of Airflow for all teams

This solution is ideal for prioritizing cost-efficiency and simplicity over strict team isolation. Managed Airflow services like Google Cloud Composer or AWS MWAA align well with this approach since they're optimized for running a single, shared Airflow instance.

Description

A single Airflow instance is shared across multiple teams, with all resources (database, web server, scheduler, and workers) being used collectively. While each team can have separate execution environments, task queues, and DAG processing configurations, they still operate within the same core infrastructure, especially the database. This lack of true isolation introduces potential risks, as one team’s actions could inadvertently impact another’s workflows or configurations.

Important disclaimer

Achieving full tenant isolation with a single Airflow deployment is NOT possible out of the box, because each Airflow environment uses a single database and Airflow lacks fine-grained access control for that database. As a result, there is no built-in mechanism to prevent DAG authors from changing or deleting any database contents: they can alter the state or return values of other DAGs, alter or remove DAGs created by other teams, and access critical elements like Connections, Variables, and the overall metadata store. There is no way to fully prevent a malicious actor from one team from impacting another, as every DAG author has unrestricted access to the entire database, and such restrictions are hard to implement effectively.

Some control can be enforced through custom CI/CD checks or by imposing user restrictions, such as using DAG factories to limit unauthorized operations in the code. However, this approach shifts the access control issue elsewhere, requiring additional external implementations. For example, while CI/CD might validate that DAGs access only permitted data, it raises a new question: who oversees and approves the CI/CD process itself?

Setup

Setting up a shared Airflow instance for multiple teams involves configuring Airflow to handle multiple execution environments, with the potential need to manage multiple task queues depending on specific requirements. This can be done using Cluster Policies to enforce separation between team workloads and operators, such as setting team-specific Kubernetes Pod Templates or Celery queues. Separate node pools should also be used to avoid performance bottlenecks.
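Such a cluster policy could look roughly like this `task_policy` in `airflow_local_settings.py`. It assumes each team's DAG files live under `/opt/airflow/dags/<team>/` and that the platform team maintains the team-to-queue and team-to-pod-template mappings (all names and paths here are hypothetical). Because the policy runs when DAGs are parsed, it overwrites whatever the DAG author set. The sketch sets both a Celery queue and the KubernetesExecutor `pod_template_file` key of `executor_config`; keep only the one that matches your executor. The fallback exception class exists only so the sketch runs without Airflow installed.

```python
from pathlib import PurePosixPath
from typing import Optional

try:
    from airflow.exceptions import AirflowClusterPolicyViolation
except ImportError:  # stand-in so this sketch also runs without Airflow installed
    class AirflowClusterPolicyViolation(Exception):
        pass

# Assumed layout: /opt/airflow/dags/<team>/... (match your [core] dags_folder).
DAGS_FOLDER = PurePosixPath("/opt/airflow/dags")
# Hypothetical mappings maintained by the platform team.
TEAM_QUEUES = {"team_a": "team_a", "team_b": "team_b"}
TEAM_POD_TEMPLATES = {
    "team_a": "/opt/airflow/pod_templates/team_a.yaml",
    "team_b": "/opt/airflow/pod_templates/team_b.yaml",
}


def _team_of(fileloc: str) -> Optional[str]:
    """Return the first directory below DAGS_FOLDER, or None for files outside a team folder."""
    try:
        parts = PurePosixPath(fileloc).relative_to(DAGS_FOLDER).parts
    except ValueError:
        return None
    return parts[0] if len(parts) > 1 else None


def task_policy(task) -> None:
    """Cluster policy called for every task when its DAG is loaded."""
    team = _team_of(task.dag.fileloc) if task.dag else None
    if team not in TEAM_QUEUES:
        raise AirflowClusterPolicyViolation(f"task {task.task_id!r} does not belong to a known team")
    # Route the task to its team's Celery workers, ignoring any queue the author chose.
    task.queue = TEAM_QUEUES[team]
    # Replace the whole executor_config (including any author-supplied pod_override)
    # so KubernetesExecutor always uses the team's pod template.
    task.executor_config = {"pod_template_file": TEAM_POD_TEMPLATES[team]}
```

Replacing the entire `executor_config` is a deliberately strict choice; a softer variant could merge in the template and strip only `pod_override`.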

Managing user roles and restricting access to specific DAGs or Airflow components in the UI can be done with built-in Airflow mechanisms. However, because the database and core resources are shared, you must carefully manage permissions and configurations to prevent teams from interfering with each other's workflows (see the `Important disclaimer` above).

Each team's subfolder of the Airflow DAGs folder can be handled by its own DAG file processor: run multiple standalone DAG processors, each started with the `--subdir` option (this requires `[scheduler] standalone_dag_processor = True`).
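As a sketch, assuming the standalone DAG processor is enabled (`AIRFLOW__SCHEDULER__STANDALONE_DAG_PROCESSOR=True`) and each team has a top-level subdirectory in the DAGs folder, the per-team commands could be derived from the folder layout. Each command would run in its own container or pod, so heavy or broken DAG files mostly affect only that team's processor.

```python
from pathlib import Path


def dag_processor_commands(dags_folder: str) -> list[list[str]]:
    """Build one `airflow dag-processor --subdir <dir>` command per team subdirectory."""
    team_dirs = sorted(
        p
        for p in Path(dags_folder).iterdir()
        # Skip hidden directories and __pycache__.
        if p.is_dir() and not p.name.startswith((".", "__"))
    )
    return [["airflow", "dag-processor", "--subdir", str(d)] for d in team_dirs]
```

Note that files placed directly in the root of the DAGs folder are not covered by any of these processors.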

Pros

- Cost-Efficient: All resources are shared, reducing infrastructure costs.

- Simplified Management: It is easier to manage since only one instance of Airflow exists.

Cons

- Security Risks: Shared access to Airflow's internal database (and possibly variables or connections) offers no isolation and can lead to unauthorized team interference.

- Performance Bottlenecks: With many teams sharing the same resources, heavy workloads can create resource contention and slow down the system if they are not correctly managed and separated.

Best For

- Organizations with smaller teams or lighter workloads that can tolerate resource sharing.

- Teams that are highly collaborative and don’t require strict isolation.

- Teams with moderate workloads where shared resources won’t cause significant performance issues.

- Cases where reducing infrastructure costs is more critical than fully isolating teams.

Possible challenges

Strong team/tenant isolation is needed

Currently, the only way to provide strong isolation is for each team/tenant to have its own Airflow instance that does not share any resources with other Airflow instances. This is because, in Airflow 2, there is no built-in mechanism that can prevent a DAG author from accessing and altering anything in the database.

Users need to authenticate

Managed Airflow (Composer, MWAA, Astro, etc.) usually takes care of authentication for you, and it's available out of the box. By default, Airflow uses the Flask AppBuilder (FAB) auth manager for authentication and authorization, which lets you create users and roles and assign permissions. You can also provide your own auth manager when needed.

Only selected people should be able to view and run specific DAGs

Airflow has an access control mechanism that lets you decide which DAGs a user can see, edit, delete, etc., but its limitations mean that such restrictions are enforced only at the UI level.

In more detail, Airflow UI Access Control only controls visibility and access in Airflow UI, DAG UI, and stable API in Airflow 2. Airflow UI Access Control does not apply to other interfaces that are available to users, such as Airflow CLI commands. This model is not enforced in the DAG and tasks code. For example, you can deploy a DAG that changes Airflow roles and user assignments for these roles.
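For these UI-level controls, Airflow 2's `DAG` accepts an `access_control` mapping from a role name to a set of permissions such as `can_read`, `can_edit`, and `can_delete`. Below is a hypothetical helper that a DAG factory might use to fill it in; the `<team>_role` role names are assumptions and must already exist in the auth manager. As described above, this only restricts what the UI and the stable API expose, not what DAG code can do.

```python
def team_access_control(team: str, readers: tuple[str, ...] = ()) -> dict[str, set[str]]:
    """Hypothetical DAG-factory helper building a DAG's `access_control` mapping.

    The owning team's role may view, edit, and delete the DAG; each role in
    `readers` may only view it. Role names are assumptions and must already exist.
    """
    control = {f"{team}_role": {"can_read", "can_edit", "can_delete"}}
    for role in readers:
        # Never downgrade a role that already has permissions (e.g. the owning team).
        control.setdefault(role, {"can_read"})
    return control


# In a DAG file or factory (Airflow 2.x):
# with DAG("team_a__daily_sales", access_control=team_access_control("team_a"), ...):
#     ...
```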

One team can only access the connections and variables that belong to it

As mentioned above, Airflow does not have a mechanism to enforce this. Airflow trusts DAG authors, so any variable or connection can easily be accessed, altered, or deleted from within a DAG.

In such cases, using an external secrets backend or implementing a custom solution, such as assigning a separate Service Account (SA) for each DAG ID and requiring DAG authors to request access to that specific SA, can help achieve better isolation and security.

It's also worth considering establishing conventions and a mutual trust agreement between users, ensuring that even though they technically have access to each other's secrets, they agree not to misuse this access. In the end, this approach can make setup and maintenance easier, while significantly reducing costs by sharing a single Airflow instance across multiple users or teams.

Some control can also be enforced through custom CI/CD checks. However, this approach shifts the access control issue elsewhere, requiring additional external implementations. For example, while CI/CD might validate that DAGs access only permitted connections, it raises a new question: Who oversees and approves the CI/CD process itself?

One team should not be able to overwrite the DAGs of another team (e.g., by naming them the same)

There is no built-in Airflow mechanism designed to prevent that scenario from happening.

One option is to add CI/CD checks. Another option is to use Airflow's cluster policies to verify, e.g., whether a DAG comes from a specific directory (using `dag.fileloc`) and adheres to some predefined rules (e.g., its `dag_id` starts with the name of the directory the team has access to). With such validation in place, one team cannot overwrite another team's DAG. The cluster policy can either reject such a DAG or change its `dag_id` (the latter can break workflows that trigger the DAG by its `dag_id`).
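A minimal sketch of this directory-based `dag_policy` (in `airflow_local_settings.py`), assuming DAG files live under `/opt/airflow/dags/<team>/` and a hypothetical `<team>__` prefix convention for DAG IDs. Raising `AirflowClusterPolicyViolation` keeps the DAG from being loaded and surfaces it as an import error; Airflow 2.7+ also provides `AirflowClusterPolicySkipDag` to skip a DAG silently. The fallback class exists only so the sketch runs without Airflow installed.

```python
from pathlib import PurePosixPath
from typing import Optional

try:
    from airflow.exceptions import AirflowClusterPolicyViolation
except ImportError:  # stand-in so this sketch also runs without Airflow installed
    class AirflowClusterPolicyViolation(Exception):
        pass

# Assumed layout: /opt/airflow/dags/<team>/... (match your [core] dags_folder).
DAGS_FOLDER = PurePosixPath("/opt/airflow/dags")


def _team_of(fileloc: str) -> Optional[str]:
    """Return the first directory below DAGS_FOLDER, or None for files outside a team folder."""
    try:
        parts = PurePosixPath(fileloc).relative_to(DAGS_FOLDER).parts
    except ValueError:
        return None
    return parts[0] if len(parts) > 1 else None


def dag_policy(dag) -> None:
    """Reject DAGs whose ID does not carry the owning team's (hypothetical) '<team>__' prefix."""
    team = _team_of(dag.fileloc)
    if team is None:
        raise AirflowClusterPolicyViolation(f"{dag.fileloc} is not inside a team directory")
    # The double underscore keeps "team" from matching DAGs of a team called "team_a".
    if not dag.dag_id.startswith(f"{team}__"):
        raise AirflowClusterPolicyViolation(
            f"DAG {dag.dag_id!r} in {dag.fileloc} must have an ID starting with {team + '__'!r}"
        )
```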

One team should not be able to view / access the DAG files of another team

Besides UI access policies that prevent a team from seeing anything but its own DAGs, you must also restrict access to the DAG files themselves. Whether you are using standalone or managed Airflow, you can create a subdirectory in the dags/ directory for each team and grant each team permissions only to its own directory. Airflow then processes all the files in the dags/ folder and all its subdirectories.

Invalid single DAG definition from one team should not break DAG processing or scheduling for everyone

Run the scheduler and the DAG processor as separate processes (a standalone DAG processor). For high availability, you can run multiple schedulers concurrently. You can also run multiple DAG processors, e.g., one per team directory (with the `--subdir` option).

One team should not be able to use another team's permissions

Each team can use its own Service Account (SA) key, mounted on Kubernetes. Even when secrets are mounted manually, it is necessary to prevent the DAG author from choosing the “wrong” executor. You can use the `pod_mutation_hook` to dynamically modify worker pod definitions and append the necessary configuration at runtime, preventing users from tampering with the worker setup at the DAG level. You can also enforce this restriction with DAG factories or CI/CD checks that ensure each team uses only its own secrets and connections, or with code reviews that verify compliance.
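A minimal `pod_mutation_hook` sketch (in `airflow_local_settings.py`) that forces each pod onto its team's Kubernetes service account. It assumes the team can be derived from a hypothetical `<team>__` prefix in the `dag_id` label that Airflow adds to the pods it launches; the service account names are assumptions. With GKE Workload Identity or EKS IAM Roles for Service Accounts, the service account is what grants the team's cloud permissions. Airflow sanitizes label values, so very long or unusual DAG IDs may need a different lookup.

```python
from typing import Optional

# Hypothetical mapping from team prefix to the Kubernetes service account
# bound to that team's cloud identity.
TEAM_SERVICE_ACCOUNTS = {"team_a": "airflow-team-a", "team_b": "airflow-team-b"}
# Hypothetical service account without any cloud permissions.
FALLBACK_SERVICE_ACCOUNT = "airflow-no-access"


def _team_of(labels: Optional[dict]) -> Optional[str]:
    # Airflow labels the pods it launches with the (sanitized) dag_id.
    team = (labels or {}).get("dag_id", "").split("__", 1)[0]
    return team if team in TEAM_SERVICE_ACCOUNTS else None


def pod_mutation_hook(pod) -> None:
    """Receives a kubernetes.client V1Pod before it is sent to the Kubernetes API."""
    team = _team_of(pod.metadata.labels)
    # Overwrite whatever service account the DAG author asked for;
    # pods that cannot be attributed to a team get no permissions.
    pod.spec.service_account_name = TEAM_SERVICE_ACCOUNTS.get(team, FALLBACK_SERVICE_ACCOUNT)
```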

Alternatively, you can configure a separate set of workers (and DAG processors) for each team, assigning them to specific queues. A combination of cluster policies and DAG file processors with subdirectory organization can limit DAGs from one folder to specific workers. While not completely foolproof, this method restricts queues and enforces some separation.

DAGs/processes of one team may need to read another team's data

Grant the Service Account of one team permission to access only the specific presentation layer of the other team’s data. This ensures controlled and intentional data sharing.

Managing secrets on K8s, mounting specific secrets manually to K8sExecutor

Instead of relying on Airflow Variables or Connections, which lack fine-grained permissions, sensitive information can be stored and managed using Kubernetes secrets. These secrets can be manually mounted and tied to specific teams. However, additional security checks should be implemented in the deployment process to ensure that secrets are only accessible to their respective teams.

How others solve the multi-team problem

Recommendations from providers of managed Airflow services

Astronomer recommends providing separate Airflow instances (url1, url2, url3)

Google provided a list of pros and cons of single-tenant and multi-tenant Composer (url)

Amazon recommends providing separate Airflow instances but suggests some security measures when using a single Airflow instance for multiple teams (url)

- Use separate environments for each team.

- Use CI/CD validation and validate DAG files before loading them to the final DAG folder.

- Use a DAG factory (or YAML/JSON DAG definitions) so that users do not write DAG code directly.

- Use an external secrets management service to overcome the limitations of Airflow's RBAC.
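To illustrate the DAG-factory recommendation, the sketch below validates a team's JSON DAG definition before a factory turns it into a DAG. The spec format, the allowed task types, and the `<team>__` (DAG ID) and `<team>_` (connection ID) prefixes are all hypothetical; the point is that users only describe tasks, while the platform decides what they may reference.

```python
import json

# Hypothetical task types the factory knows how to turn into operators.
ALLOWED_TASK_TYPES = {"sql_query", "http_request"}


class SpecError(ValueError):
    """Raised when a team's DAG spec breaks a platform rule."""


def validate_spec(raw: str, team: str) -> dict:
    """Validate a team's JSON DAG spec before the factory builds a DAG from it."""
    spec = json.loads(raw)
    errors = []
    if not str(spec.get("dag_id", "")).startswith(f"{team}__"):
        errors.append(f"dag_id must start with {team + '__'!r}")
    tasks = spec.get("tasks", [])
    ids = [t.get("task_id") for t in tasks]
    if len(ids) != len(set(ids)):
        errors.append("task_id values must be unique")
    for task in tasks:
        tid = task.get("task_id")
        if task.get("type") not in ALLOWED_TASK_TYPES:
            errors.append(f"{tid}: type {task.get('type')!r} is not allowed")
        conn_id = task.get("conn_id")
        if conn_id is not None and not str(conn_id).startswith(f"{team}_"):
            errors.append(f"{tid}: connection {conn_id!r} belongs to another team")
        for upstream in task.get("upstream", []):
            if upstream not in ids:
                errors.append(f"{tid}: unknown upstream {upstream!r}")
    if errors:
        # Report every problem at once so authors can fix the spec in one pass.
        raise SpecError("; ".join(errors))
    return spec
```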

Real-World Examples

Disclaimer: This analysis is based on publicly available information about how companies are deploying Airflow, such as recordings, blog posts, and other shared materials. It does not imply that the described practices represent the entirety of their deployment strategies.

Custom solution built on top of Airflow (single or multiple instances)

Apple (url)

- Created custom interfaces and layers to handle tasks like authentication, version control, and integration with secret management systems. [1:05]

- Replaced direct database access (e.g., for XCom) with custom web service and API calls. [2:35]

- Safeguards the scheduler against malicious plugins while still allowing custom operators and code. [5:20]

Adobe (url)

- Introduced a JSON-based DSL for users, backed by a custom solution that converts the definitions into Airflow DAGs.

- Validates everything (code correctness, permissions, etc.) within the custom solution.

- After encountering scalability issues, set up multiple Airflow clusters with an abstraction layer on top, so that users remain agnostic of the underlying clusters.

A single instance of Airflow for all teams…

Separate Airflow deployment per team

Balyasny Asset Management (BAM) (url)

- Runs multiple Airflow instances on Kubernetes; everything (Airflow tasks, environment, etc.) is containerized and runs using KubernetesPodOperator [4:35]

- Every pod is one Airflow task, and each team is responsible for its pod configuration [8:52]

- Uses a variation of the Helm chart, but every team gets its own separate Airflow [17:29]

- Moved to a hybrid approach: some teams use managed Airflow, while others stay on the company-managed deployment [21:17]

Delivery Hero (url [11:20])

- Within each Airflow, built-in RBAC is used to provide DAG-level access, configured through YAML files.

DXC Technology (url)

- Runs multiple Airflows on Kubernetes, though it’s unclear if resources are shared.

Fanduel (url [7:55])

kiwi.com (url)

- Migrated from a self-managed monolith to around 60 smaller environments on Astronomer.

- Using Okta for authentication and authorization across all Airflow deployments.

- Access is assigned at the Airflow instance level, with no finer control.

- GCP Workload Identity is heavily utilized.

Shopify (url, lessons learned blog post)

- Isolated Airflow instances run in separate K8s namespaces, though some resources seem shared. [7:50]

- They use dag_policy to block non-compliant DAGs and limit resource usage, ensuring separation in a shared environment. [8:45]

- Management of connections, variables, etc., begins at [13:30].

Snap (url)

- Using GKE with multiple namespaces, spawning Airflow instances on demand. [9:47]

- No credentials are stored on disk or in the Airflow database; custom UI elements are added to their IAM manager. [13:01]

- DAGs are isolated by assigning each DAG an exclusive Service Account, allowing users to request resource permissions for the SA without direct access themselves. [17:06]

What Most Companies Choose

Based on publicly available resources, real-world implementations of multi-team Airflow deployments vary widely. However, the majority opt for separate Airflow environments, typically deployed on Kubernetes or managed services for better isolation and scalability.

Some companies use a single Airflow instance as the foundation but restrict direct user access through custom interfaces or DSLs. This approach enforces team separation at the application level, enabling granular control that Airflow alone cannot provide. However, this method often involves building an entirely separate application layer that merely uses Airflow as a backend, requiring significant effort to develop and maintain over time.

Others adopt a hybrid approach, sharing a single Airflow instance for less critical teams while providing separate, isolated deployments for more sensitive or high-impact workloads. This approach enables cost savings when trust between users can be established while balancing resource efficiency with the need for strict separation of critical operations.

These diverse strategies highlight the trade-offs between isolation, operational complexity, and budget constraints, providing valuable lessons for designing Airflow solutions in multi-team settings.

Airflow 3

Important disclaimer

Although work on Airflow 3 is underway, and some AIPs have been accepted, details still need to be resolved. While key concepts have been agreed upon, there's no guarantee that everything will be implemented exactly as planned or described in this document.

AIPs and ETAs

The main AIP for multi-team setups in Airflow is AIP-67. It lists other AIPs that make it possible, e.g., AIP-72, which replaced AIP-44 and will provide fine-grained database access only through the Task SDK.

You can track the progress of work on Airflow 3 here, but note that AIP-67 is NOT scheduled for 3.0; it is planned for a later release.

Some bullet points from AIP-67 (what most probably will be possible in the future):

- define team-specific configuration (variables, connections)

- execute the code submitted by team-specific DAG authors in isolated environments (both parsing and execution)

- allow different teams to use different sets of dependencies/execution environment libraries

- allow different teams to use different executors.

Summary

Choosing the right Airflow setup requires careful consideration of your team's needs for isolation, resource management, and collaboration. In its current form, Airflow 2 lacks native multi-tenancy support, meaning that organizations looking for strong separation between teams should ideally provide each team with its own dedicated Airflow environment. This approach ensures that issues or failures within one team's workflows don't impact others. However, if resource sharing is a priority, there are ways to deploy Airflow such that teams can share an instance, though this comes with trade-offs in terms of security and isolation. Trust between teams is essential in such setups, as preventing one team's actions from affecting another is difficult.

Looking ahead, Airflow 3 promises better support for multi-team environments. This forthcoming feature could streamline deployment for organizations managing multiple teams, though the implementation details are still evolving. When designing your Airflow architecture, it's important to weigh current limitations against future capabilities.

Additional resources

Airflow Summit talks about multi-tenancy in Airflow

Airflow docs

Other resources

- 2023-04 GH Discussion - override the pod template and add the affinity for each task (or for each dag via default args).

- Google Cloud Composer has some automation for Airflow’s RBAC (url)